When I was in my second year of university, I took the “Programming Languages” course and learned the functional languages Oz and Haskell. Almost everyone in the class hated them and their weird syntax, but they grew on me. I haven’t used them since, but I remember that when I went back to my co-op job at Chalk doing C#/ASP.NET, I felt inadequate. Well, I didn’t feel inadequate; my tools did.

The collections libraries in .NET were approximations of the platonic idea of lists I had learned in Oz and Haskell. They seemed like cheap imitations built on workarounds; I could work with them, but they felt clumsy.

At this point, I got very interested in LINQ and started using lambdas all over my code. Finally, I thought, I could use lists in C#! I did some research and learned that the lambdas I was writing had their origin in something called “Lambda Calculus.” I did more research and eventually wrote an essay on the concept of lambdas in .NET. It fascinated me that code could be represented as a data structure that I could manipulate at runtime. It intrigued me as a programmer, but it seemed like playing with fire, and I didn’t pursue the idea any further.

I spent the next two years developing professionally in other languages, mostly C and Objective-C. Last year, I learned to use blocks (anonymous Objective-C functions) and I rejoiced! I finally had lambdas in my favourite language! Since then I’ve been using blocks throughout my code. At first I went looking for places to use them; now I think in terms of blocks. I don’t really know how I got along without them.

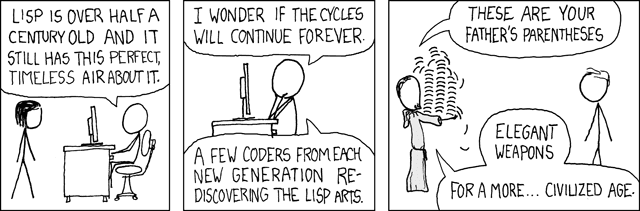

Fast forward to the present day: I’m reading *Hackers and Painters* by Paul Graham. In other words, I’ve decided to learn Lisp. Learning it has taken me back to lambdas in C#.

I feel the same way I did two years ago when I was learning Objective-C. I was so used to static typing in Java and C# that the dynamic typing in Objective-C seemed … dangerous. Treating code as data feels the same way. My inner pragmatist, a C coder no doubt, is terrified of buffer overflows and of the difference between unsigned and signed integers.

Trying to change the way you think is a challenge. I worry that I’ll have to readjust when I go back to writing lower-level code, but I can always choose to narrow my focus; I can’t choose to broaden it without learning something new.
